New month, new update for Home Assistant! And this month is very clearly marked by artificial intelligence, allowing you to control your entire home. Not only with Assist, Home Assistant's built-in assistant, but also via OpenAI (ChatGPT) and Google AI! The new features don't stop there, however: we also have super-simple media player controls, further improvements to data tables, tag entities, collapsible map sections, and much more!

Voice Assistants and AI

The brain of the voice assistant is called a “conversational agent.” It is responsible for understanding the intent behind the voice command, performing an action, and generating a response.

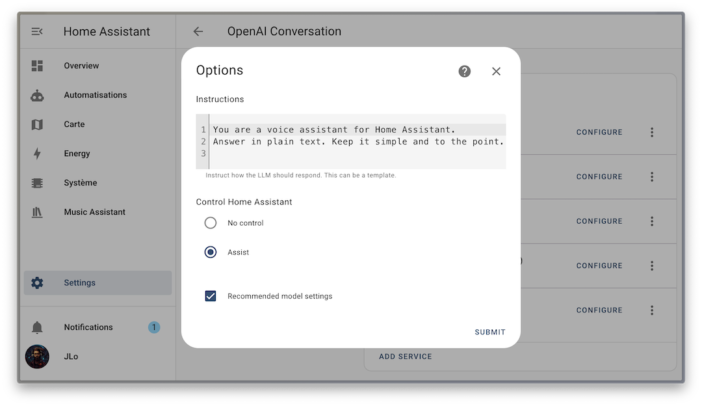

From the beginning, Home Assistant has allowed its conversational agent to be replaced with an agent based on LLMs. But until now, these two worlds (the Home Assistant chatbot controlling your home and LLM-based chatbots) didn't overlap: it was impossible to control your home from an LLM-based chatbot. You had to use one or the other, which was cumbersome. Well, that's now solved! When setting up an LLM-based chatbot, a single setting lets you decide whether that chatbot is allowed to control your home.

With this new setting, LLM-based chatbots can leverage our intent system, which powers the assistant. They also have access to all entities exposed to the assistant. This way, you control what your agents have access to.

Using the intent system is very interesting because it works right out of the box. LLM-based chatbots can do everything Assist can. The added benefit is that they can reason beyond words, something Assist couldn't.

For example, if you have a light called “Webcam Light” exposed in your “office” area, you can give direct commands such as:

Turn on the office webcam light.

This previously worked with Assist, but you can also give more complex commands, such as:

I'm going to a meeting, can you make sure people see my face?

The chatbot will determine the intent behind the words and invoke the correct intent on the corresponding exposed entities.
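To make the role of exposure concrete, here is a minimal sketch in Python. It is a toy model, not Home Assistant's actual API: the `Entity` class, `exposed_entities`, and `handle_turn_on` are hypothetical names invented for illustration. It only shows the idea that an intent can act on exposed entities and nothing else.

```python
from dataclasses import dataclass


@dataclass
class Entity:
    # Hypothetical, simplified stand-in for a Home Assistant entity.
    entity_id: str
    name: str
    area: str
    exposed: bool
    state: str = "off"


def exposed_entities(entities):
    """Return only the entities the assistant is allowed to see."""
    return [e for e in entities if e.exposed]


def handle_turn_on(entities, name, area):
    """Toy 'turn on' intent: acts only on exposed entities matching name and area."""
    matches = [
        e
        for e in exposed_entities(entities)
        if e.name.lower() == name.lower() and e.area.lower() == area.lower()
    ]
    for entity in matches:
        entity.state = "on"
    return [e.entity_id for e in matches]


entities = [
    Entity("light.webcam_light", "Webcam Light", "office", exposed=True),
    Entity("lock.front_door", "Front Door", "hallway", exposed=False),
]

# An LLM would translate "make sure people see my face" into an intent call
# like this one. The mapping is hard-coded here because no model is involved.
changed = handle_turn_on(entities, name="webcam light", area="Office")
print(changed)
```

In this sketch, the front door lock can never be reached by the agent, however the request is phrased, because it is filtered out before any matching happens.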

This feature is available for both the OpenAI and Google AI integrations. To make LLMs easier to get started with, the developers have updated both integrations with recommended model parameters that strike a good balance between accuracy, speed, and cost. With the recommended settings, the two perform equally well on voice assistant tasks. Google is 14 times cheaper than OpenAI, but OpenAI is better at answering non-smart home questions.

Local LLMs have also been supported via the Ollama integration since Home Assistant 2024.4. However, Ollama and the main open-source LLM models aren't tuned for tool invocation, so this must be built from scratch.

Simplified Media Player Controls

This update introduces new intents for media players that let you do more with less. You can say the following voice commands to control media players located in the same area as your Assist device:

Pause

Resume

Next

Set volume to 50%

Until now, Home Assistant only offered phrases targeting a specific entity by name. Because of this limitation, these intents weren't the most user-friendly: the phrases were too long to say, for example, “Skip to the next song on the living room TV.”

So the developers added two features to make the phrases as short as possible. Context awareness allows the voice assistant to recognize devices in the same area as the satellite. They also created a smart matching strategy that finds the right media player to target.

For example, if you say “pause,” the voice assistant will automatically target the media player that is currently playing. Just make sure your voice assistant device is assigned to an area that contains a media player, and you're good to go! Commands become much more natural this way.
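The matching logic described above can be sketched roughly as follows. This is an illustrative guess at the strategy, not Home Assistant's real implementation: `MediaPlayer`, `pick_target`, and the rule "act only when exactly one candidate remains" are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class MediaPlayer:
    # Hypothetical, simplified media player record.
    entity_id: str
    area: str
    state: str  # "playing", "paused", or "idle"


def pick_target(
    players: List[MediaPlayer], satellite_area: str, command: str
) -> Optional[MediaPlayer]:
    """Pick the media player a short command most plausibly refers to."""
    # Context awareness: only consider players in the satellite's area.
    candidates = [p for p in players if p.area == satellite_area]

    # Smart matching: "pause" targets a playing player, "resume" a paused one.
    wanted_state = {"pause": "playing", "resume": "paused"}.get(command)
    if wanted_state is not None:
        candidates = [p for p in candidates if p.state == wanted_state]

    # Assumption: act only when the choice is unambiguous.
    return candidates[0] if len(candidates) == 1 else None


players = [
    MediaPlayer("media_player.living_room_tv", "living room", "playing"),
    MediaPlayer("media_player.living_room_speaker", "living room", "idle"),
    MediaPlayer("media_player.kitchen_radio", "kitchen", "playing"),
]

target = pick_target(players, satellite_area="living room", command="pause")
print(target.entity_id if target else "no unambiguous target")
```

Here the kitchen radio is ignored because it is in another area, and the idle speaker is ignored because there is nothing to pause on it.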

Dashboard Customization

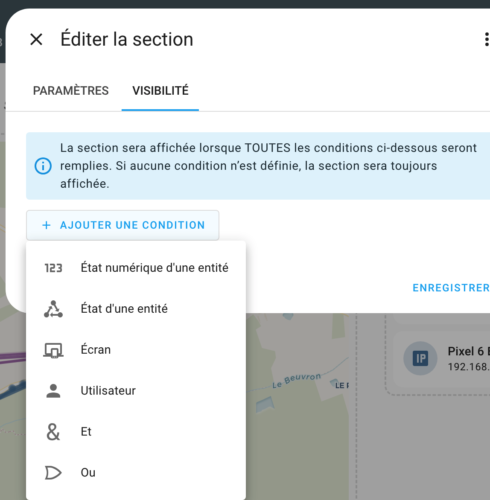

Work on dashboard customization and organization continues after the latest updates. This month, a new feature lets you show or hide a dashboard section based on conditions you define.

For example, you might want to display a section on mobile only when you're at home. Or, you might want to display the switch to turn off the kitchen lights only when they're on. Perhaps you have a section that only concerns you or your partner and you want to hide it from the kids?

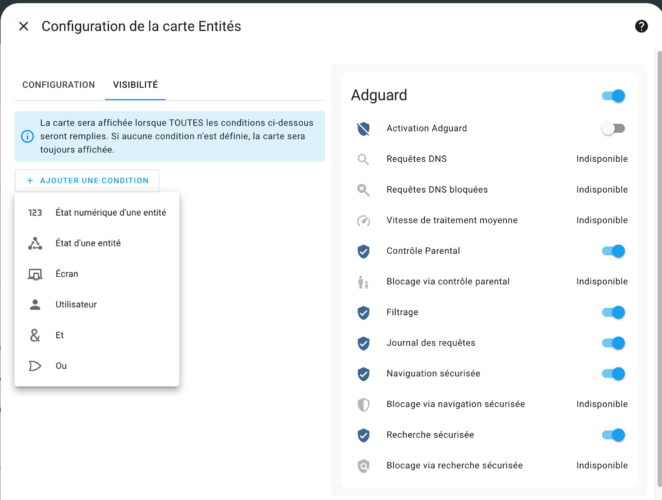

This same visibility feature is now available for cards!

You can now hide or show a card based on certain conditions. This allows you to create more dynamic dashboards that adapt to your needs. The big difference is that you no longer need a conditional card to use this feature. It's available directly in the card configuration, in the Visibility tab!
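As a rough illustration of how such visibility rules behave, the Python sketch below evaluates a list of conditions that must all hold for a section or card to be shown. The condition dictionaries are loosely modelled on dashboard configuration, but the exact keys, the `is_visible` function, and the entity and user names are assumptions made for this example, not the frontend's real code.

```python
def is_visible(conditions, states, current_user):
    """Return True only if every condition in the list is satisfied."""
    for cond in conditions:
        kind = cond["condition"]
        if kind == "state":
            # Show only while the entity is in the expected state.
            if states.get(cond["entity"]) != cond["state"]:
                return False
        elif kind == "user":
            # Show only to the listed users.
            if current_user not in cond["users"]:
                return False
        else:
            raise ValueError(f"unsupported condition type: {kind}")
    return True


# Hypothetical rule: show the "turn off kitchen lights" switch only
# while the lights are on, and only to the two adults.
kitchen_switch_visibility = [
    {"condition": "state", "entity": "light.kitchen", "state": "on"},
    {"condition": "user", "users": ["alex", "sam"]},
]

states = {"light.kitchen": "on"}
print(is_visible(kitchen_switch_visibility, states, current_user="alex"))
print(is_visible(kitchen_switch_visibility, states, current_user="kid"))
```

An empty condition list makes the element always visible in this sketch, which matches the intuition that an element with no rules is simply shown.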

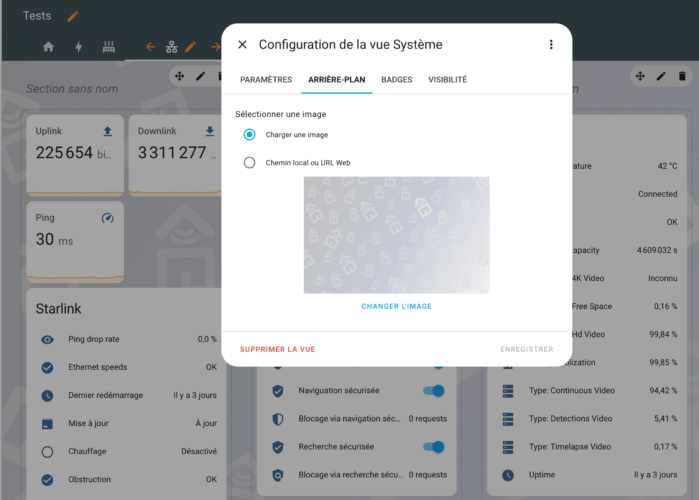

Another new feature in the dashboard interface: the ability to set a background image! Previously, this was only possible by editing YAML, but now you can do it directly from the user interface. Better yet, you can upload an image directly from your computer or provide an image URL!

Improved Data Tables

It is now possible to collapse and expand all groups at once.

In addition, the filters you set in data tables are now saved in your browsing session. This means that if you leave the page and return, your filters will still be present. Each browser tab or window has its own session, meaning you can have different filters in different tabs or windows that are remembered for that specific tab or window.

Integrations

New integrations are coming:

- Airgradient: Provides air quality data from your local Airgradient device.
- APsystems: Monitors your APsystems EZ1 microinverters, such as the one used in the Solaris Go solar station!
- Azure Data Explorer: Transfers Home Assistant events to Azure Data Explorer for analysis.
- IMGW-PIB: Hydrological data from the Institute of Meteorology and Water Management – National Research Institute, providing information on rivers and water reservoirs in Poland.
- Intelligent Storage Acceleration: The Intelligent Storage Acceleration Library (ISAL) is used to accelerate the Home Assistant frontend. It is automatically enabled.
- Monzo: Connect your Monzo bank account to Home Assistant and get information about your account balance.

Matter Updates to Version 1.3

As we recently saw, Matter has been updated to version 1.3. Home Assistant has already adapted to this update, which improves the reliability and compatibility of Matter devices.

This version also improves and expands device support. Matter-based air purifiers and room air conditioners are now supported, and thermostats and other climate devices have received significant fixes around setpoints.

A few Matter devices have what are called “custom clusters.” Custom clusters are a standardized way for manufacturers to include non-standardized data. Home Assistant wants to support custom clusters, but developers must add support for each one individually.

This release allows Home Assistant to be notified when data in a custom cluster changes (instead of requesting it at regular intervals). This will reduce network traffic and improve the performance of your Matter network, especially on large Thread networks.

Tags are becoming entities!

Home Assistant has long supported tags. The tags feature lets you use NFC tags or QR codes with Home Assistant, for example, to trigger automations. However, tags weren't real entities and therefore weren't always logical to use. With this release, tags are now normal entities and can be used in automations, scripts, templates, and even added to your dashboards!

You can find all the new features in the official blog post.

If you prefer to quickly discover the new features via video, I also invite you to follow Howmation, which has planned a short debriefing video for each upcoming update: https://www.youtube.com/watch?v=2ZlEkiQSfrk

There you go! Have fun with all these new features! I'm getting back to it ;-)
